AWS Auto Scaling in with CloudWatch CPU and Memory metrics using Go

Over the last four years, I have worked mostly with applications deployed directly on AWS EC2 instances, usually LEMP or LAMP stacks, with the occasional Node.js flavour. When it came to auto-scaling, it was pretty easy to identify the bottlenecks and weaknesses of our services, so I always set up CloudWatch Alarms based on either Memory or CPU metrics.

Now that I’m working with Kubernetes and Docker containers, the rules of the game have changed drastically. How can you tell whether your worker nodes should scale based on their CPU utilisation? And what about Memory? Or both? The worker nodes, in AWS, are just normal EC2 instances that aren’t aware of what happens inside them. They don’t care whether you’re running Nginx + PHP or 50 containers doing millions of things at the same time, which is why you still have to use Amazon’s tools to scale them out and in accordingly.

For the first time ever, I set up four CloudWatch Alarms (two for the CPU and two for the Memory) and gave it a go. I started to stress my platform by load-testing a couple of services with hey, and waited…

Not quite the result I expected

As the load increased and the Memory utilisation went beyond the threshold set previously, the CloudWatch Alarm executed the Auto Scaling policy. The cluster was joined by a new instance, which was the exact behaviour I expected, but after 5 minutes I noticed that the instance it had just created was being shut down. According to CloudWatch, the Memory was somewhere between the High and Low utilisation thresholds, so it shouldn’t have triggered any action. The CPU was a different story, though: the cluster was using ~30% of its CPU and it was set to scale back in whenever its utilisation was < 35%.

What does that mean? It means that the CloudWatch Alarms and the Auto Scaling group aren’t aware of each other or of how many alarms are set, which is a fair point. So, whenever something similar happens, regardless of whether you’re scaling out or in, it’ll always apply a rule such as this one:

```go
if metric1 || metric2 {
	// scale here
}
```

I looked online, hoping to find a solution and change that || into a &&, but the only thing I found was a six-year-old thread on the AWS forum with quite a few people not so happy about the moderators’ answers.

That’s when I decided to stop moaning about it, take some action and write a Go package that I’d then call Sigmund.

Sigmund is just a Shrink [for ASGs]!

It’s easy to get mad at Amazon sometimes because they didn’t write some features you truly need, although if you look at the bigger picture, they wrote so much more that gives you the power of doing, literally, whatever you want. We’re software engineers after all, right?

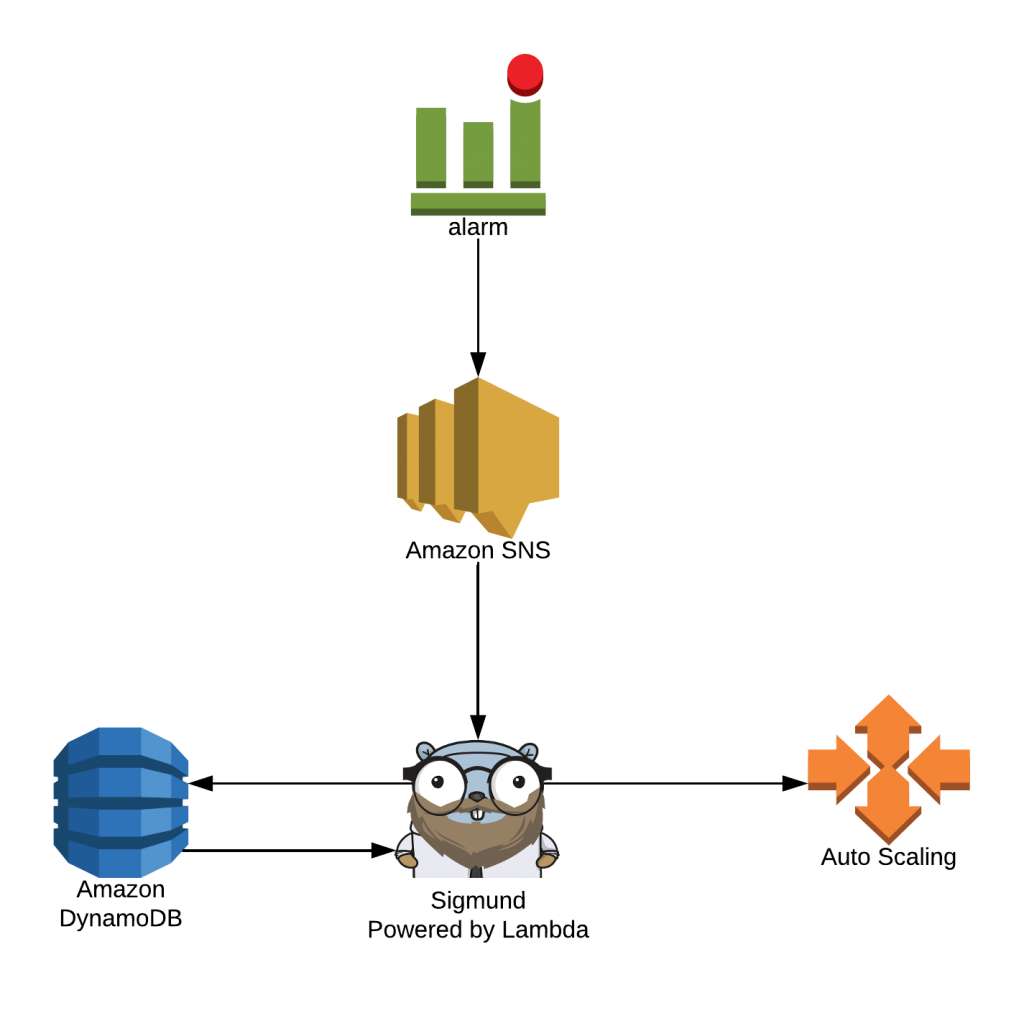

The diagram above shows Sigmund’s architecture and the way it operates within the Amazon ecosystem. The key thing is that a CloudWatch Alarm triggers an SNS topic rather than an Auto Scaling policy directly. SNS then triggers a Lambda function on which Sigmund is deployed.

Sigmund reads from and writes to a DynamoDB table, where it saves its state in two key items: isLowCPU and isLowMemory. As you can imagine, they are Booleans, and Sigmund changes one or the other depending on the topic that triggered the Lambda function. When both items are true, it uses an autoscaling package to execute a scale-in policy and, of course, resets both items in the table to false.
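To make the "depending on the topic" step a bit more concrete, here is a minimal sketch of how the topic ARN in an SNS-triggered Lambda event could be mapped to one of the two state keys. It is not Sigmund’s actual code: keyForTopic, the snsEvent struct and the two ARNs are hypothetical names made up for illustration, and only the standard library is used so that it runs outside Lambda.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// snsEvent mirrors only the fields of the Lambda SNS event payload
// that this sketch needs (hypothetical type, not the SDK's).
type snsEvent struct {
	Records []struct {
		Sns struct {
			TopicArn string `json:"TopicArn"`
		} `json:"Sns"`
	} `json:"Records"`
}

// keyForTopic returns the state key to flip for the topic that fired.
// cpuArn and memArn are assumed to come from configuration.
func keyForTopic(payload []byte, cpuArn, memArn string) (string, error) {
	var ev snsEvent
	if err := json.Unmarshal(payload, &ev); err != nil {
		return "", fmt.Errorf("cannot decode SNS event: %v", err)
	}
	if len(ev.Records) == 0 {
		return "", fmt.Errorf("SNS event has no records")
	}

	switch ev.Records[0].Sns.TopicArn {
	case cpuArn:
		return "isLowCPU", nil
	case memArn:
		return "isLowMemory", nil
	}
	return "", fmt.Errorf("unexpected topic %q", ev.Records[0].Sns.TopicArn)
}

func main() {
	// Made-up ARNs, for illustration only.
	const cpuArn = "arn:aws:sns:eu-west-1:123456789012:low-cpu"
	const memArn = "arn:aws:sns:eu-west-1:123456789012:low-memory"

	payload := []byte(`{"Records":[{"Sns":{"TopicArn":"` + memArn + `"}}]}`)
	key, err := keyForTopic(payload, cpuArn, memArn)
	fmt.Println(key, err) // prints "isLowMemory <nil>"
}
```

Anything that doesn’t match one of the two known topics is rejected, so a misconfigured alarm can’t silently flip a flag.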

Let’s get technical

I consider myself a Go Noob: I had tried hard to start a new project using this language, but I had never found either the time or the right inspiration. This issue, however, was particularly stimulating and gave me the right nudge and courage to go wild and start coding.

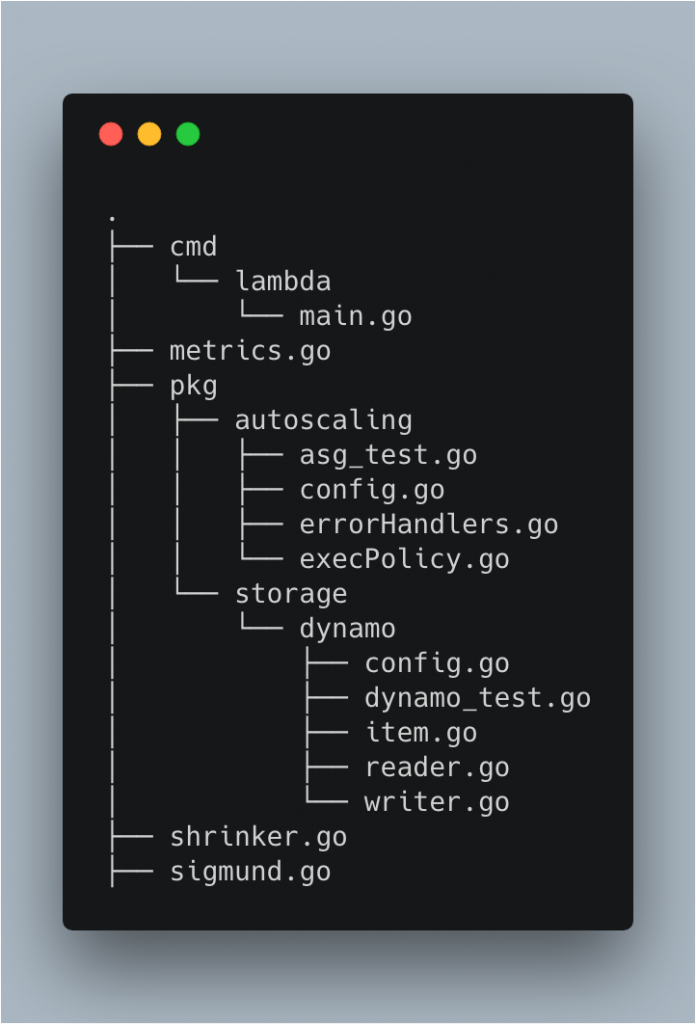

First things first, let’s take a look at the folder structure:

You can immediately notice that Sigmund comes with two packages, dynamo and autoscaling, which are the core components of this application. I decided to make them independent so that it would be easier in the future to swap them for an alternative product. For example, if I decided I didn’t want to use DynamoDB to save the state anymore and wanted to use Redis instead, it’d be much easier to change the data store at the top level of the application without having to rewrite the logic of the application itself. I won’t talk about the autoscaling package as it’s pretty straightforward, but we can spend some time looking at what the DynamoDB one looks like.
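One way to picture that kind of decoupling is a small interface that the scaling logic depends on, with the concrete data store plugged in behind it. The sketch below is only an illustration under my own assumptions: StateStore, memoryStore and bothLow are hypothetical names that don’t exist in Sigmund, and the in-memory map stands in for DynamoDB (or Redis).

```go
package main

import "fmt"

// StateStore is a hypothetical abstraction over wherever the two
// flags live; a DynamoDB- or Redis-backed type could satisfy it.
type StateStore interface {
	Set(key string, value bool) error
	Get(key string) (bool, error)
}

// memoryStore is an in-memory stand-in used only for this example.
type memoryStore struct {
	flags map[string]bool
}

func newMemoryStore() *memoryStore {
	return &memoryStore{flags: map[string]bool{}}
}

func (m *memoryStore) Set(key string, value bool) error {
	m.flags[key] = value
	return nil
}

func (m *memoryStore) Get(key string) (bool, error) {
	return m.flags[key], nil
}

// bothLow depends only on the interface, so swapping the backing
// store doesn't require touching this logic.
func bothLow(store StateStore) (bool, error) {
	cpu, err := store.Get("isLowCPU")
	if err != nil {
		return false, err
	}
	mem, err := store.Get("isLowMemory")
	if err != nil {
		return false, err
	}
	return cpu && mem, nil
}

func main() {
	store := newMemoryStore()

	store.Set("isLowCPU", true)
	low, _ := bothLow(store)
	fmt.Println(low) // prints "false": only one flag is set

	store.Set("isLowMemory", true)
	low, _ = bothLow(store)
	fmt.Println(low) // prints "true": both flags are set
}
```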

The first thing we should do with the dynamo package is create a new client in order to establish a connection with the database. We first initialise the package with a constructor I defined as New():

```go
// New is the package constructor that initialises
// the DynamoDB config
func New(tbl, region, key string) (*Dynamo, error) {
	config, err := checkConfig(tbl, region, key) // checks if the parameters passed in are valid
	if err != nil {
		return nil, fmt.Errorf("Initialisation error: %v", err)
	}

	return config, nil
}
```

Dynamo is a custom type I defined, which is used to return a DynamoDB client to the user:

```go
// NewClient creates a DynamoDB client
func (dynamo *Dynamo) NewClient() *Client {
	var client Client

	sess, err := newSession(dynamo.Region) // uses the AWS SDK to create a new session
	if err != nil {
		panic(fmt.Errorf("Cannot open a new AWS session: %v", err))
	}

	client.DynamoDB = dynamodb.New(sess)

	return &client
}
```

With a client initialised, we’re now ready to interact with the database, meaning we’re ready to read and write to it:

```go
// WriteToTable executes an Update query on the DynamoDB
// updating the provided key with the isLow bool
func (client *Client) WriteToTable(table, key string, isLow bool) error {
	input := dbUpdateItemInput(table, key, isLow)

	_, err := client.UpdateItem(input) // executes a query using the AWS SDK
	if err != nil {
		return fmt.Errorf("Something went wrong while running the Update query: %v", err)
	}

	fmt.Printf("Successfully updated %v to '%v'\n", key, isLow)

	return nil
}

// ReadFromTable executes a Select query on the DynamoDB
// database returning the output in an Item format
func (client *Client) ReadFromTable(table string) (*Item, error) {
	var item Item

	result, err := client.GetItem(&dynamodb.GetItemInput{
		TableName: aws.String(table),
		Key: map[string]*dynamodb.AttributeValue{
			"ID": {
				N: aws.String("0"),
			},
		},
	})
	if err != nil {
		return nil, fmt.Errorf("Error getting item from DynamoDB: %v", err)
	}

	err = dynamodbattribute.UnmarshalMap(result.Item, &item)
	if err != nil {
		return nil, fmt.Errorf("Failed to unmarshal record: %v", err)
	}

	return &item, nil
}
```

Finally, the Sigmund package will provide the logic used to decide whether the cluster has to be shrunk or not:

```go
// Shrink is the core function of the package
// which executes an Autoscaling Group Policy
// when requirements are met
func (s *Sigmund) Shrink() error {
	var item *DBItem

	dbClient, err := s.newDBClient()
	if err != nil {
		return err
	}

	// Run a Select query
	item, err = s.readDynamo(dbClient)
	if err != nil {
		return err
	}

	// Flag the metric whose alarm triggered this run
	switch s.Dynamo.Key {
	case "isLowCPU":
		if !item.IsLowCPU {
			err = dbClient.WriteToTable(s.Dynamo.TableName, s.Dynamo.Key, true)
			if err != nil {
				return err
			}
			item.IsLowCPU = true
		}
	case "isLowMemory":
		if !item.IsLowMemory {
			err = dbClient.WriteToTable(s.Dynamo.TableName, s.Dynamo.Key, true)
			if err != nil {
				return err
			}
			item.IsLowMemory = true
		}
	}

	// Scale in only when both metrics are low, resetting the state first
	if item.IsLowCPU && item.IsLowMemory {
		err = dbClient.WriteToTable(s.Dynamo.TableName, "isLowCPU", false)
		if err != nil {
			return err
		}

		err = dbClient.WriteToTable(s.Dynamo.TableName, "isLowMemory", false)
		if err != nil {
			return err
		}

		err = s.execASGPolicy()
		if err != nil {
			return err
		}
	}

	return nil
}
```

In a nutshell:

- Whenever the CPU utilization is below the minimum threshold, set isLowCPU = true

- Whenever the Memory utilization is below the minimum threshold, set isLowMemory = true

- if isLowCPU && isLowMemory { s.execASGPolicy(); isLowCPU, isLowMemory = false, false }
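To see those rules in action without touching AWS, here is a tiny in-memory simulation. It is a sketch, not the real package: the state type and the onAlarm method are hypothetical names, the flags live in a struct instead of DynamoDB, and "scaling in" is reduced to a returned boolean.

```go
package main

import "fmt"

// state holds the two flags that Sigmund keeps in its table
// (here simply in memory).
type state struct {
	isLowCPU    bool
	isLowMemory bool
}

// onAlarm records the low-utilisation alarm identified by key and
// reports whether the scale-in policy should run. When it should,
// both flags are reset to false.
func (s *state) onAlarm(key string) bool {
	switch key {
	case "isLowCPU":
		s.isLowCPU = true
	case "isLowMemory":
		s.isLowMemory = true
	}

	if s.isLowCPU && s.isLowMemory {
		*s = state{} // reset both flags
		return true
	}
	return false
}

func main() {
	var s state
	alarms := []string{"isLowCPU", "isLowCPU", "isLowMemory", "isLowMemory"}

	for _, key := range alarms {
		fmt.Printf("%s -> scale in: %v\n", key, s.onAlarm(key))
	}
	// Output:
	// isLowCPU -> scale in: false
	// isLowCPU -> scale in: false
	// isLowMemory -> scale in: true
	// isLowMemory -> scale in: false
}
```

A repeated CPU alarm on its own never triggers anything. Only the first Memory alarm that arrives while the CPU flag is set does, and after the reset the next alarm starts from scratch. As in the rules above, a flag stays true until both have fired.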

Since we want to use it with Lambda, we should create a binary that is simple enough to read and to modify at any time. It should probably look like this:

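```go
package main

import (
	"context"
	"fmt"
	"os"

	"github.com/aws/aws-lambda-go/events"
	"github.com/aws/aws-lambda-go/lambda"
)

// envVars lists the environment variables the function needs
var envVars = [6]string{
	"REGION",
	"ASG_NAME",
	"POLICY_NAME",
	"SNS_CPU",
	"SNS_MEMORY",
	"TABLE_NAME",
}

// init fails fast if any required variable is missing
func init() {
	for _, e := range envVars {
		if os.Getenv(e) == "" {
			panic(fmt.Errorf("ERROR: %s must be defined", e))
		}
	}
}

func main() {
	lambda.Start(handler)
}

// handler relies on helpers (getSNSArn, checkSNSArn, throwError,
// populateConfig, shrink) defined elsewhere in the binary
func handler(ctx context.Context, snsEvent events.SNSEvent) {
	event := getSNSArn(snsEvent) // gets the SNS ARN from the body of the request

	m, err := checkSNSArn(event) // checks whether the ARN is a valid one
	throwError(err)

	conf := populateConfig(m)
	shrink(conf) // this triggers the Sigmund package
}
```

Conclusions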

Whilst working on this project, I learned a couple of lessons that I’d like to share with you all:

- Go is awesome, period! My main concern was “How do I structure my code?” but later it kinda came together by itself and started to make more and more sense. I’m sure a lot of expert Go developers would be horrified just by looking at it but, please, be patient with me; after all, I’m a Noob, as mentioned in my preface 🙂 On that matter, I really recommend this repo by Kat Zień about Go standards;

- The AWS ecosystem is powerful enough to let you find a way around some problems quite easily but, in my opinion, they should definitely pull their stuff together, be more community friendly, communicate more about the state of their roadmaps, progress etc., and not wait 6 years before giving an anything-but-useful update on their forum;

- I also learned that my team at Avast respects my title and what the Dev in DevOps stands for. They understood the problem, acknowledged my solution and didn’t try to take it away from me by assigning the task to a “real developer”, an upsetting thing that I was told at previous companies.

Furthermore, Sigmund is a pivotal project I used to present Go to a team made up predominantly of PHP, JavaScript and C++ developers, and I hope it’ll be as inspirational for them as it was for me when I looked at it for the very first time.

Edit: This package is now available here.